Introduction

In the ever-evolving field of Artificial Intelligence (AI), deep learning has emerged as a powerful approach that has enabled substantial breakthroughs across diverse sectors. Deep learning is built on neural networks, computational models inspired by the neural connections in the human brain. This blog's objective is to provide an in-depth understanding of deep learning, explore how neural networks work, and highlight their applications across different industries.

Deep learning is a subset of machine learning that involves training artificial neural networks to carry out specific tasks. Unlike traditional machine learning algorithms that rely on manual feature engineering, deep learning models can automatically learn hierarchical representations of data through multiple layers of interconnected neurons. These deep neural networks can extract complex patterns and features from raw data, which makes them particularly effective at solving hard problems.

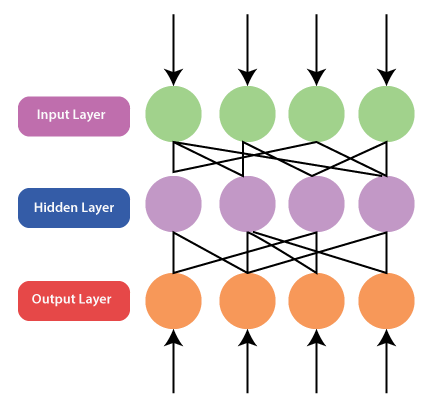

Neural Networks: The Building Blocks of Deep Learning

Neurons and Activation Functions: At the core of a neural network are artificial neurons, also known as nodes or units. Each neuron computes a weighted sum of its inputs plus a bias, applies an activation function to the result, and produces an output. Common activation functions include the sigmoid, ReLU (Rectified Linear Unit), and tanh (hyperbolic tangent).
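To make this concrete, here is a minimal sketch of a single neuron and the three activation functions named above, written in plain Python so it has no dependencies. The input values, weights, and bias are arbitrary illustrative numbers, and the `neuron` helper is a hypothetical name chosen for this example.

```python
import math

# Three common activation functions, each applied to one pre-activation value z.

def sigmoid(z: float) -> float:
    """Squashes z into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z: float) -> float:
    """Passes positive values through unchanged and maps negative values to 0."""
    return max(0.0, z)

def tanh(z: float) -> float:
    """Squashes z into the range (-1, 1), using the standard-library implementation."""
    return math.tanh(z)

def neuron(inputs, weights, bias, activation):
    """Hypothetical helper: weighted sum of inputs plus bias, then the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

if __name__ == "__main__":
    x = [1.0, -2.0]   # illustrative inputs
    w = [0.5, 0.25]   # illustrative weights
    b = 0.1           # illustrative bias
    # Pre-activation: 1.0*0.5 + (-2.0)*0.25 + 0.1 = 0.1
    for f in (sigmoid, relu, tanh):
        print(f.__name__, neuron(x, w, b, f))
```

The same weighted sum feeds all three functions; only the non-linearity applied afterwards changes.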

Layers: A neural network is typically organized into layers. The input layer receives the raw data, and the output layer produces the final predictions. In between are one or more hidden layers responsible for feature extraction and learning.
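One way to picture this layered structure is to describe a network only by its layer sizes and count the parameters that connect consecutive layers. The sketch below assumes a fully connected (dense) network, where every neuron in one layer connects to every neuron in the next. The sizes `[3, 4, 1]` and the helper names are hypothetical.

```python
# A fully connected network described by its layer sizes:
# index 0 is the input layer, the last entry is the output layer,
# and every entry in between is a hidden layer.
layer_sizes = [3, 4, 1]  # hypothetical: 3 input features, 4 hidden neurons, 1 output

def parameter_shapes(sizes):
    """Return (weight_shape, bias_count) for each pair of consecutive layers."""
    shapes = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        # One weight per connection (n_out x n_in) and one bias per receiving neuron.
        shapes.append(((n_out, n_in), n_out))
    return shapes

def count_parameters(sizes):
    """Total number of trainable weights and biases in the network."""
    return sum(rows * cols + n_bias for (rows, cols), n_bias in parameter_shapes(sizes))

if __name__ == "__main__":
    for i, (w_shape, b_len) in enumerate(parameter_shapes(layer_sizes), start=1):
        print(f"layer {i}: weights {w_shape}, biases {b_len}")
    print("total parameters:", count_parameters(layer_sizes))
```

For `[3, 4, 1]` the hidden layer has 4 * 3 + 4 = 16 parameters and the output layer has 1 * 4 + 1 = 5, for 21 in total.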

Weights and Biases: Each connection between neurons has an associated weight, and each neuron has a bias term. During training, these parameters are updated to minimize the difference between the predicted and actual outputs, as measured by a loss function.
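The "difference between predicted and actual outputs" is captured by a loss function. The sketch below uses mean squared error, which is one common choice and is assumed here purely for illustration. Classification models more often use cross-entropy. The target values and the "before" and "after" predictions are made-up numbers.

```python
def mean_squared_error(predicted, actual):
    """Average of the squared differences between predictions and targets."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)

targets = [1.0, 0.0, 2.0]
before = [0.5, 0.5, 1.0]  # hypothetical predictions early in training
after = [0.9, 0.1, 1.8]   # hypothetical predictions after some weight updates

if __name__ == "__main__":
    print("loss before:", mean_squared_error(before, targets))
    print("loss after: ", mean_squared_error(after, targets))
```

Training adjusts the weights and biases so that this number goes down. Predictions closer to the targets give a smaller loss.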

Forward Propagation: During inference (and at the start of every training step), data flows through the neural network in a process called forward propagation. The neurons in each layer process their inputs and pass their outputs to the next layer until the final prediction is made.
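The following is a minimal sketch of forward propagation through a tiny 2 -> 2 -> 1 network. Each layer's outputs become the next layer's inputs. The weights, biases, and the ReLU-then-linear layer choice are hypothetical values picked so the arithmetic is easy to follow by hand.

```python
def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one output neuron."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Forward propagation: pass each layer's output to the next layer."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense(activations, weights, biases, activation)
    return activations

# Hypothetical hand-picked parameters: (weights, biases, activation) per layer.
network = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),  # hidden layer
    ([[2.0, 1.0]], [0.5], identity),                # output layer
]

if __name__ == "__main__":
    # Hidden layer: relu(3 - 1) = 2, relu(1.5 + 0.5) = 2
    # Output layer: 2*2 + 1*2 + 0.5 = 6.5
    print(forward([3.0, 1.0], network))  # [6.5]
```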

Backward Propagation: Neural networks are trained using backpropagation. It computes the gradient of the loss function with respect to every weight and bias by applying the chain rule backward through the network, layer by layer. An optimization algorithm such as gradient descent then uses these gradients to adjust the parameters. Repeating this process reduces the prediction error and fine-tunes the model.
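The training loop can be illustrated on the smallest possible case, a single sigmoid neuron, where the chain rule can be written out by hand. This is a sketch under several assumptions: the toy dataset (logical OR), the learning rate, the epoch count, and the use of full-batch gradient descent with binary cross-entropy loss are all illustrative choices. Real frameworks compute these gradients automatically for networks with many layers.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def bce(p, y):
    """Binary cross-entropy for one example; eps guards against log(0)."""
    eps = 1e-12
    return -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))

# Hypothetical toy dataset: logical OR of two binary inputs.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

def train(data, lr=0.5, epochs=2000):
    w = [0.0] * len(data[0][0])
    b = 0.0
    history = []
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        total_loss = 0.0
        for x, y in data:
            # Forward pass.
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            total_loss += bce(p, y)
            # Backward pass: for sigmoid + cross-entropy the chain rule gives
            # dL/dz = p - y, so dL/dw_i = (p - y) * x_i and dL/db = p - y.
            dz = p - y
            grad_w = [g + dz * xi for g, xi in zip(grad_w, x)]
            grad_b += dz
        n = len(data)
        # Gradient descent step using the gradients averaged over the dataset.
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
        history.append(total_loss / n)
    return w, b, history

if __name__ == "__main__":
    w, b, history = train(data)
    print("first loss:", history[0], "last loss:", history[-1])
    for x, y in data:
        print(x, y, round(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b), 3))
```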

Illustration of a simple neural network

Input layer: The input layer is the first layer of the neural network, where raw data is fed into the model. Each neuron in the input layer represents one feature or dimension of the input data. The layer's main role is to receive the input and pass it on to the subsequent layers for further processing.
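For image data, "one neuron per feature" usually means one neuron per pixel. The sketch below flattens a small grayscale image into the list of values that would feed the input layer. The 3x3 image is made up, and scaling pixel values into [0, 1] is a common preprocessing step assumed here for illustration. A 28x28 image would produce 784 input neurons in the same way.

```python
# Hypothetical 3x3 grayscale image with pixel intensities in [0, 255].
image = [
    [0, 128, 255],
    [64, 32, 16],
    [255, 0, 8],
]

def to_input_vector(grid, scale=255.0):
    """Flatten a 2-D grid row by row and scale each value into [0, 1].

    Each element of the returned list feeds one neuron of the input layer.
    """
    return [pixel / scale for row in grid for pixel in row]

if __name__ == "__main__":
    x = to_input_vector(image)
    print(len(x), "input neurons:", x)
```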

Hidden layer: Hidden layers are the intermediate layers between the input and output layers. They play a vital role in feature extraction and representation learning. Each neuron in a hidden layer receives inputs from the preceding layer, computes a weighted sum using its weights and bias, and then applies an activation function to introduce non-linearity. Hidden layers transform the input data into representations that allow the neural network to learn relevant patterns and relationships.
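Why the non-linearity matters can be shown with a tiny 1 -> 2 -> 1 network whose weights are fixed by hand (hypothetical values). Without an activation function, the hidden layer computes x and -x, and the output neuron adds them, so the whole network collapses to the constant 0. Stacked linear layers are still just a linear function. With ReLU in the hidden layer, the same weights compute the absolute value |x|, which no single linear layer can represent.

```python
def relu(z):
    return max(0.0, z)

def identity(z):
    return z  # "no activation": the hidden layer stays linear

def tiny_network(x, activation):
    """Hypothetical 1 -> 2 -> 1 network with fixed weights.

    Hidden neurons compute activation(1*x) and activation(-1*x);
    the output neuron adds them with weights 1 and 1 and bias 0.
    """
    h1 = activation(1.0 * x)
    h2 = activation(-1.0 * x)
    return 1.0 * h1 + 1.0 * h2

if __name__ == "__main__":
    for x in (-2.0, -0.5, 0.0, 1.5):
        print(x, "linear:", tiny_network(x, identity), "relu:", tiny_network(x, relu))
```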

Output layer: The output layer is the final layer of the neural network, responsible for producing the model's predictions. The number of neurons in this layer depends on the specific task the network is designed to solve. For example, in binary classification there is typically a single neuron with a sigmoid activation function, which produces a probability between 0 and 1. In multi-class classification, there are multiple neurons, each representing one class, and the activation function is typically softmax, which produces class probabilities. In regression tasks, the output layer may have a single neuron with a linear activation function to generate continuous numerical predictions.
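The three output-layer setups described above differ only in the function applied to the last layer's raw outputs (often called logits). The following is a minimal sketch. The input values passed to each function are hypothetical. Subtracting the largest logit inside `softmax` is a standard numerical-stability trick that does not change the result.

```python
import math

def sigmoid(z):
    """Binary classification: one output neuron giving P(positive class)."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(logits):
    """Multi-class classification: one neuron per class; outputs sum to 1."""
    m = max(logits)  # shift by the max to avoid overflow in exp
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def linear(z):
    """Regression: the raw output is used directly as the prediction."""
    return z

if __name__ == "__main__":
    print(sigmoid(0.8))              # probability for a binary task
    print(softmax([2.0, 1.0, 0.1]))  # probabilities for a 3-class task
    print(linear(3.7))               # continuous numerical prediction
```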

Applications of Deep Learning

Computer Vision: Deep learning has revolutionized computer vision tasks such as image classification, object detection, and facial recognition. State-of-the-art models such as CNNs (Convolutional Neural Networks) have achieved remarkable accuracy on complex visual tasks.

Natural Language Processing (NLP): NLP tasks such as sentiment analysis, machine translation, and language generation have seen significant improvements with the advent of deep learning models, including RNNs (Recurrent Neural Networks) and Transformers.

Speech Recognition: Deep learning has enabled significant improvements in speech recognition systems, making voice-controlled assistants and transcription services more accurate and accessible.

Autonomous Vehicles: Deep learning is vital for self-driving vehicles because it enables them to process sensor data, detect objects, and make real-time driving decisions.

Healthcare: Deep learning models have been applied to medical image analysis, disease diagnosis, drug discovery, and personalized treatment recommendations.

Finance: In the financial sector, deep learning is used for fraud detection, algorithmic trading, credit risk assessment, and market forecasting.

The future of deep learning holds several exciting possibilities

Transfer Learning: Transfer learning makes it possible to reuse knowledge learned on one task for related tasks, reducing the need for extensive training data.

Explainable AI: Researchers are actively working on making deep learning models more interpretable, improving our understanding of, and trust in, their decisions.

Hybrid Models: Combining deep learning with other AI techniques, such as symbolic reasoning, could yield more flexible and efficient AI systems.

Generative Adversarial Networks (GANs) Advancements: GANs have shown exceptional ability to generate realistic data, including images, music, and text. Future developments could produce even more realistic and diverse outputs, opening new opportunities in creative applications and data augmentation.

Robust and Adversarial AI: Research focused on improving the robustness of deep learning models against adversarial attacks is ongoing. Advances in this area will be essential for deploying AI systems safely and securely, especially in critical applications such as autonomous vehicles and cybersecurity.

Conclusion

Deep learning and neural networks have propelled the field of AI to new heights, enabling groundbreaking achievements across various industries. By automatically learning representations from data, deep learning models have outperformed conventional algorithms on complex tasks such as image recognition, natural language processing, and autonomous driving. While challenges remain, ongoing research and technological advances continue to push the boundaries of what deep learning can achieve. Looking ahead, the potential impact of deep learning on society is vast, promising a world in which AI becomes an indispensable tool for human progress.